Hello,

I have measured some very strange scheduling behaviour from System.Threading.Timer on the G400.

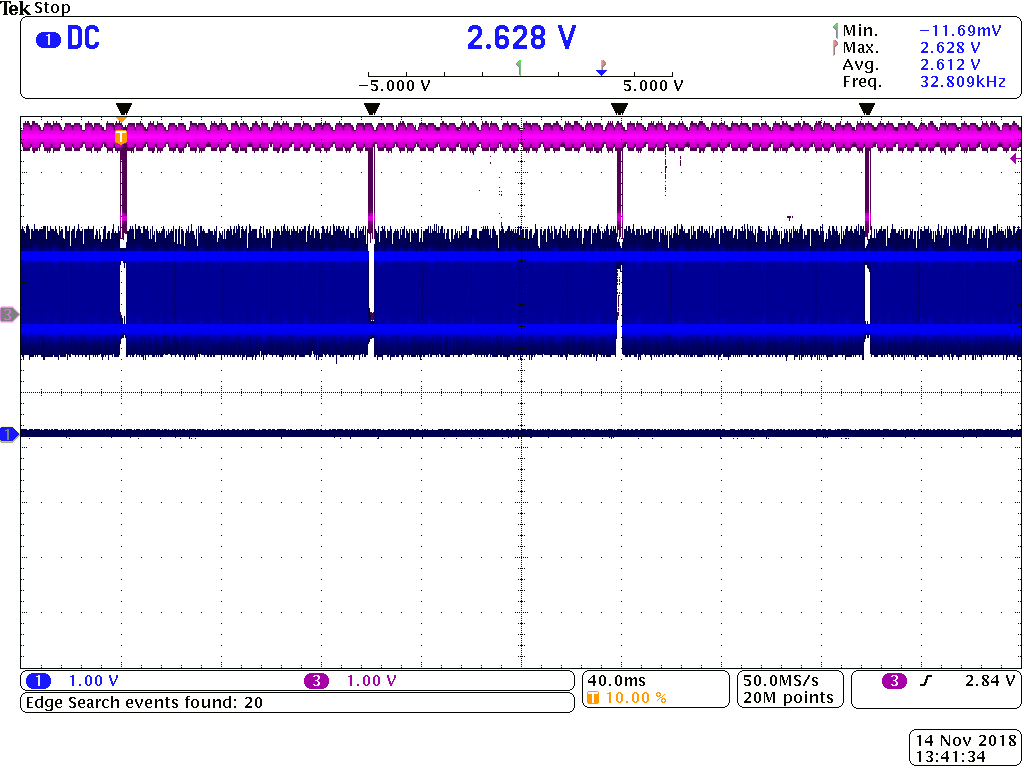

A thread toggles a GPIO continuously at 100% CPU (blue trace). This shows when the scheduler runs: every 20 ms, a gap appears in the toggling.

I start five timers, each with a 100 ms period, i.e.

T = 100,100,100,100,100

Each time a timer fires, its callback toggles another GPIO once (magenta trace).

As expected, on every fifth scheduler run (every 100 ms), all five timers fire one after the other:

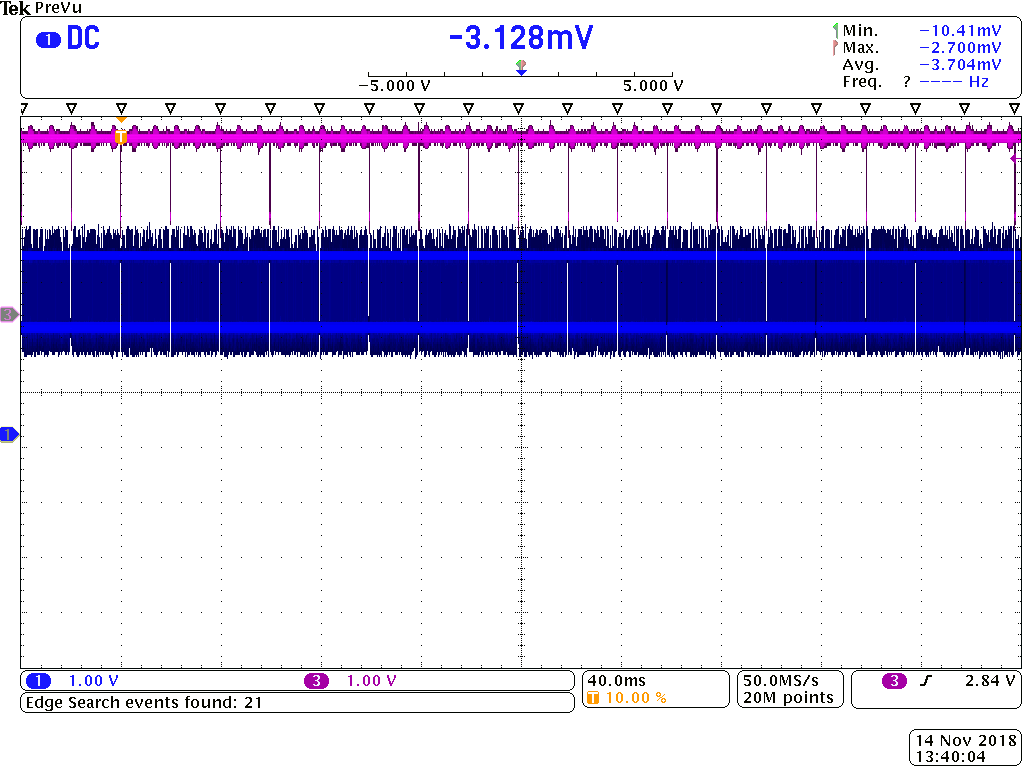

However, this is what happens if I reduce one timer’s period slightly, i.e.

T = 100,100,100,100,80

Now the timers are fired strictly one per scheduling event.

I have tried various combinations of timer counts and periods, and found that you CANNOT exceed one timer elapsing per 20 ms, or your timing is hopelessly lost. Worse, some events are silently discarded.

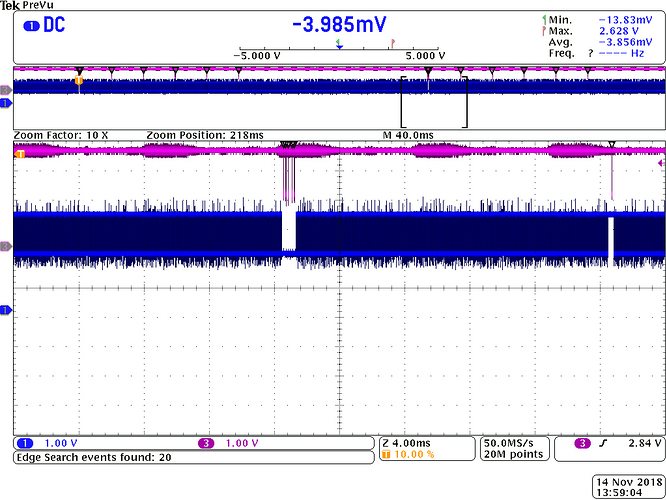

E.g.:

T=40,40 works (0,2,0,2 timers per tick), but T=40,20 does not: it should be 1,2,1,2 but is 1,1,1,1 (2 of every 6 events get lost!)

T=60,60,60 works (0,0,3,0,0,3), but T=60,60,40 does not: it should be 0,1,2,1,0,3… but is 1,1,1,1
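To make the "should be" sequences above easy to check, here is a small Python model (not code for the board) that counts how many timers ought to elapse at each 20 ms scheduler tick. It assumes every timer starts at t=0, keeps its original due-time grid (no drift), and that a timer due anywhere in the interval (t - 20 ms, t] should be fired on the tick at t. `expected_counts` and `TICK_MS` are illustrative names.

```python
TICK_MS = 20  # scheduler period, seen as gaps in the blue trace


def expected_counts(periods_ms, n_ticks, tick_ms=TICK_MS):
    """Number of timers that should elapse at each of the first n_ticks ticks.

    Assumption: all timers start at t=0, so a timer with period p is due at
    p, 2p, 3p, ...; each due time is served by the first tick at or after it.
    """
    counts = []
    for k in range(1, n_ticks + 1):
        t = k * tick_ms
        # Multiples of p in the half-open interval (t - tick_ms, t].
        counts.append(sum(t // p - (t - tick_ms) // p for p in periods_ms))
    return counts


print(expected_counts([40, 20], 4))           # [1, 2, 1, 2]
print(sum(expected_counts([40, 20], 4)))      # 6 events expected; 1,1,1,1 delivers 4
print(expected_counts([60, 60, 40], 6))       # [0, 1, 2, 1, 0, 3]
print(expected_counts([100] * 5, 5))          # [0, 0, 0, 0, 5]
```

Comparing `sum(expected_counts(...))` with the number of toggles actually seen on the magenta trace gives the count of discarded events directly.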

I don’t understand this restriction. It seems really arbitrary that the scheduler is willing to fire more than one timer per scheduling event in some cases but not in others.

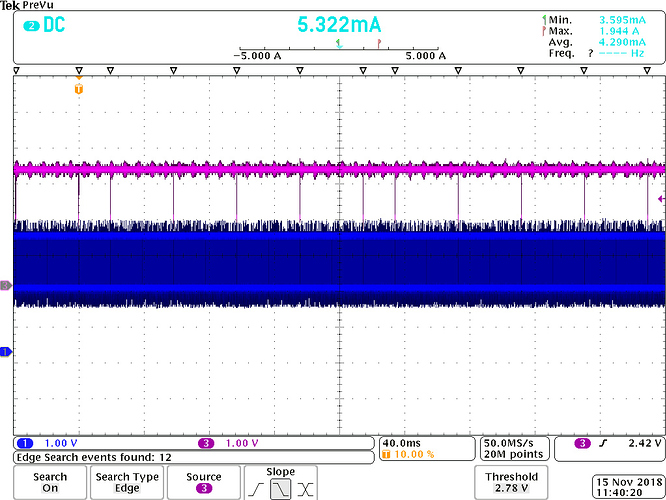

I tried to find the limit and found that you cannot increase the number of timers indefinitely: five timers elapsing appears to be the maximum permitted per scheduling event.

If you try 10 timers, all with T=200, you get 5 firing simultaneously, then 1 on each of the next 5 scheduling events. This is even more bizarre than I expected (5 at once, then 5 one at a time).
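To put a number on how late the deferred timers in the 10-timer case are, the sketch below converts the observed per-tick counts into per-event lateness. It assumes the batch of 5 fires on the tick where all ten timers are due (t = 200 ms), which is how the trace is described but is not separately confirmed; `lateness_ms` is an illustrative name.

```python
TICK_MS = 20  # scheduler period observed on the trace


def lateness_ms(observed_counts, tick_ms=TICK_MS):
    """Lateness of each firing for a burst of timers that are all due on one tick.

    observed_counts[i] is the number of timers seen firing i ticks after the
    due tick (assumption: index 0 is the due tick itself).
    """
    delays = []
    for offset, n in enumerate(observed_counts):
        delays.extend([offset * tick_ms] * n)
    return delays


# Ten timers with T=200 ms: 5 fire on the due tick, then 1 on each of the next 5.
delays = lateness_ms([5, 1, 1, 1, 1, 1])
print(delays)       # [0, 0, 0, 0, 0, 20, 40, 60, 80, 100]
print(max(delays))  # 100
```

Under that assumption the last timer fires 100 ms late, half of its own 200 ms period.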

I feel pretty strongly that this is a bug and not a design feature. When I schedule things, I expect my framework to make a best effort to meet those requirements, rather than silently abandoning my timing and firing fewer events than I requested.

Can I get some insight here please?

Thank you,

William